If you’re a regular reader of our blog, you may remember an article a while back about a piece of software called Cacti. It’s a nifty little web-based program that gathers information from a variety of hardware using SNMP. Cacti then presents the data in easily readable graphs.

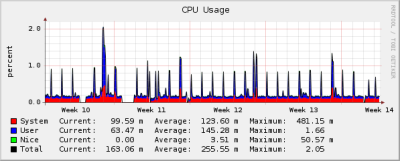

At the time of the article, I installed Cacti for one of the organizations whose IT infrastructure I administer. Not only did I get a better idea of the bandwidth and hardware utilization on the servers, but I could also see how much CPU the workstations were consuming. Although I knew that the CPU usage on the servers was quite low, I didn’t anticipate that the CPU usage on the workstations would be as low as it was.

The organization is quite a typical office environment with 20-some workstations running mainly our own software plus web, e-mail, word-processing and spreadsheet applications. The hardware is quite modern, with Celeron CPUs ranging from 1.8 GHz to 2.7 GHz and between 256 MB and 512 MB of RAM. All workstations are running Windows 2000 Professional. Before I installed Cacti, I thought that the CPU usage during the day would average maybe 30-40%, with some significant peaks pushing up the average. However, I was quite surprised to find out how wrong my estimate was. It turned out that the average CPU usage on these workstations was less than 10% for all machines, and less than 5% for most of the machines, with only a few significant spikes. It’s true that Cacti only polls the workstations every five minutes, but after the months I’ve been running it, the figures should still be fairly accurate.
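To make the numbers above concrete, here is a minimal Python sketch of how an average and a spike count could be derived from polled readings. The function name `summarize_cpu`, the 50% spike threshold and the sample values are all assumptions made up for illustration; they are not data exported from Cacti or measurements from these workstations.

```python
from statistics import mean


def summarize_cpu(samples, spike_threshold=50.0):
    """Return (average, peak, spike_count) for CPU-usage percentages.

    `samples` is assumed to hold one reading per poll, e.g. one
    every five minutes. `spike_threshold` is an arbitrary cut-off.
    """
    if not samples:
        raise ValueError("need at least one sample")
    average = mean(samples)
    peak = max(samples)
    # Count the readings at or above the threshold as spikes.
    spikes = sum(1 for s in samples if s >= spike_threshold)
    return average, peak, spikes


# Hypothetical readings: one hour of data at one poll per five minutes.
example = [3.0, 4.0, 2.0, 5.0, 60.0, 4.0, 3.0, 2.0, 6.0, 4.0, 3.0, 0.0]

avg, peak, spikes = summarize_cpu(example)
print(f"average={avg:.1f}% peak={peak:.1f}% spikes={spikes}")
```

Note how a single 60% reading barely moves the average. Since the poller only takes one reading every five minutes, short bursts between polls may not show up at all, which is why the long observation period matters.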

With this data on hand it’s quite obvious that we’ve been over-investing in hardware for the workstations. Even though I would rather overestimate a little bit than underestimate, it seems my estimates were far too high. Even so, when purchasing new workstations, we’ve pretty much bought the cheapest Celeron available from the major PC vendors, so maybe it would have been hard to adjust the purchases even with these figures on hand.

After some thinking I came up with three possible ways to deal with this problem:

1. Ignore it

I guess this is what most companies do. Maybe the feeling of being ‘future-proof’ is valued more than the fact that you have a lot of idle time. The benefit of doing this is that you have modern hardware that is less likely to break than old hardware.

2. Buy used hardware

Most people in power would be scared of just thinking about this. However, since there is no really new low-end hardware available, this appears to be the only way to buy less powerful machines. The first problem you will face is probably finding uniform hardware. As an administrator, you know how much easier it is to administer 20 identical workstations than 20 different ones, both in terms of drivers and hardware maintenance.

The second problem that came to mind is reliability. Obviously, hardware that is 5-10 years old is more likely to break than brand new hardware, and if it does, there is no warranty to cover it. However, if you buy used hardware, your budget will likely allow you to buy a couple of replacement computers.

There are also security implications of buying used computers. Every modern company with an intelligent IT staff is concerned about security, for both software and hardware. If you buy used hardware, there is a chance that it might be compromised (hardware sniffers etc.). I guess the only way to deal with this problem is to carefully inspect all the hardware you purchase.

If you or your company do choose to buy used hardware, there are plenty of places to buy it. One of the more interesting ones I found was a company in Australia called Green PC, which sells a variety of computers and peripherals for a reasonable price.

3. Donate idle-time (to internal or external use)

With the rise of clusters, distributed computing and virtualization, there are today plenty of ways to put idle time to good use. One of the more famous projects that deal with this is Folding@Home, a project at Stanford University that uses the participants’ idle CPU/GPU time to do medical research. More recently, a project at Berkeley called BOINC created a program that lets the user choose between a variety of distributed computing projects within the same application. By participating in such a project, the company can generate positive publicity (if the participation is significant).

If your company isn’t interested in donating idle time to charity or research, it might still be able to use the idle time itself. If all your workstations are connected with a high-speed connection (preferably gigabit), you might be able to use the computers in a virtualization environment. This is doable in theory, but I don’t know how well it would work in reality. Another alternative might be to use the idle time for internal computations. If your company is in the software business, distributed compiling might be one way to use the CPUs more efficiently. If this is not interesting, there are plenty of distributed computing solutions that can be used on an intranet for calculations that the company might otherwise pay a third party to compute.

Hopefully you now have a better idea of how you can use your idle time more efficiently, but you should be careful. Some people argue that today’s computers are not built to run at 100% utilization 24/7. This is a very valid point, since neither the components on the motherboard nor the fans are likely to withstand 100% utilization for very long without breaking. Therefore it is recommended to try to find an optimal distribution algorithm that spreads the calculation over the nodes without pushing the individual workstations until they break. I have to admit that I don’t have any data on how the lifetime of the workstations will be affected by running this type of software, but I would guess that it will have some impact.
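As a rough illustration of what such a distribution could look like, here is a minimal Python sketch. It is a simple greedy heuristic, not an optimal algorithm: each job goes to the currently least-loaded workstation, and a job is left unassigned if it would push that workstation above a cap. The function name `distribute`, the 70% cap, the workstation names and all load figures are hypothetical, and loads are expressed as whole CPU percentages.

```python
import heapq


def distribute(tasks, nodes, cap=70):
    """Greedily spread tasks over nodes without exceeding `cap`.

    tasks: list of estimated CPU costs per task, in percent
    nodes: dict of node name -> baseline CPU usage, in percent
    Returns (assignments, unassigned).
    """
    # Min-heap keyed on current load, so the idlest node comes first.
    heap = [(load, name) for name, load in nodes.items()]
    heapq.heapify(heap)
    assignments = {name: [] for name in nodes}
    unassigned = []

    # Place the largest tasks first so they get the most headroom.
    for cost in sorted(tasks, reverse=True):
        load, name = heapq.heappop(heap)
        if load + cost <= cap:
            assignments[name].append(cost)
            load += cost
        else:
            # If the idlest node cannot take it, no other node can.
            unassigned.append(cost)
        heapq.heappush(heap, (load, name))
    return assignments, unassigned


# Hypothetical workstations with their baseline usage in percent.
workstations = {"ws01": 5, "ws02": 10, "ws03": 8}
jobs = [40, 30, 30, 20, 50]

plan, leftover = distribute(jobs, workstations)
print(plan, leftover)
```

The cap is the knob that trades throughput for wear: a lower value leaves more headroom for the person actually sitting at the workstation and keeps the machines further away from sustained full load.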

To round off this article, I would like to discuss one question that is highly relevant: “Why are there no low-end, cheap computers available?”

If you go to Dell’s or HP’s homepage and look for their cheapest ‘office’ hardware, it’s still far more than what is required for most office use. So why is it this way? As I see it, there are several reasons for this, involving both the software and hardware manufacturers in a mutual effort to stimulate sales. Obviously, the hardware manufacturers want us to replace our computers as often as possible, since this is how they make their profits. The software manufacturers, on the other hand, want to sell new versions of their software by adding fancy new features that are unlikely to add to productivity but require hardware upgrades to run properly.

Let’s say you’re on board with my ideas and decide to look for cheaper hardware, but still feel that used hardware is too risky. One possibility might be to go for some kind of ITX solution. These come with a less powerful CPU and often include everything you need for desktop usage, but cost less than regular computers. One benefit of using ITX boxes is that they are very small and light, which makes them cheap to store and ship internally.

Author: Viktor Petersson Tags: Business