Our email transfer service YippieMove is essentially software as a service. The customer pays us to run some custom software on fast machines with a lot of bandwidth. We initially picked VMware virtualization technology for our back-end deployment because we desired to isolate individual runs, to simplify maintenance and to make scaling dead easy. VMware was ultimately proven to be the wrong choice for these requirements.

Ever since the launch over a year ago we used VMware Server 1 for instantiating the YippieMove back-end software. For that year performance was not a huge concern because there were many other things we were prioritizing for YippieMove ’09. Then, towards the end of development, we began doing performance work. We switched from a data storage model best described as “a huge pile of files” to a much cleaner sqlite3 design. The reason for this was technical: the email mover process opened so many files at the same time that we’d hit various limits on simultaneously open file descriptors. While running sqlite over NFS posed its own set of challenges, they were not as insurmountable as juggling hundreds of thousands of files in a single folder.

The new sqlite3 system worked great in testing – and then promptly bogged down on the production virtual machines.

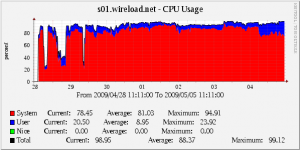

We had heard before that I/O performance and disk performance are the weaknesses of virtualization, but we thought we could work around that by putting the job databases on an NFS export from a non-virtualized server. Instead the slowness we saw blew our minds. The core servers spent a constant 70% of CPU time on system tasks and, despite an uninterrupted 100% CPU usage, we could not transfer more than 400 kbit/s worth of IMAP traffic per physical machine. This was off by an order of magnitude from our expected throughput.

Obviously something was wrong. We doubled the amount of memory per server, we quadrupled sqlite’s internal buffers, we turned off sqlite auto-vacuuming, we turned off synchronization, we added more database indexes. These things helped but not enough. We twiddled endlessly with NFS block sizes but that gave nothing. We were confused. Certainly we had expected a performance difference between running our software in a VM compared to running on the metal, but that it could be as much as 10X was a wake-up call.
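For readers who want to try the same kind of tuning, here is a minimal sketch using Python's standard `sqlite3` module. It is not the actual YippieMove code: the `messages` table, the index name and the cache size of 8000 pages are assumptions made up for illustration. The three PRAGMAs correspond to the knobs listed above (bigger page cache, no auto-vacuum, no synchronous writes).

```python
import sqlite3


def open_tuned(path, cache_pages=8000):
    """Open a SQLite database with the kind of settings described above.

    cache_pages is an assumed value; a positive cache_size is counted in pages.
    """
    conn = sqlite3.connect(path)
    # auto_vacuum only takes effect if set before the first table is created
    # (or after a full VACUUM on an existing database).
    conn.execute("PRAGMA auto_vacuum = NONE")
    conn.execute(f"PRAGMA cache_size = {int(cache_pages)}")
    # synchronous = OFF trades crash safety for speed: no fsync per commit.
    conn.execute("PRAGMA synchronous = OFF")
    return conn


conn = open_tuned(":memory:")
# Hypothetical schema: one row per message being transferred.
conn.execute(
    "CREATE TABLE IF NOT EXISTS messages "
    "(id INTEGER PRIMARY KEY, folder TEXT, uid INTEGER, state TEXT)"
)
# An extra index on the columns a job would look messages up by.
conn.execute(
    "CREATE INDEX IF NOT EXISTS idx_messages_folder_uid ON messages (folder, uid)"
)
conn.commit()
```

Turning `synchronous` off is only reasonable when a job can be restarted from scratch after a crash, since a power loss can leave the database file corrupt.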

At this point we realized that no amount of tweaking was likely to get our new sqlite3 version out of its performance hole. The raw performance just wasn’t there. We suspected at least part of the problem was that we were running FreeBSD guests in VMware. We checked that we were using the right network card driver (yes we were). We checked the OS version – 7.1, yep that one was supposedly the best you could get for VMware. We tuned various sysctl values according to guides we found online. Nothing helped.

We had the ability to switch to a more VM-friendly guest OS such as Ubuntu and hope it would improve performance. But what if that didn’t resolve the situation? That’s when FreeBSD jails came up.

Jails are a sort of lightweight virtualization technique available on the FreeBSD platform. They are like a chroot environment on steroids where not only the file system is isolated out but individual processes are confined to a virtual environment – like a virtual machine without the machine part. The host and the jails use the same hardware but the operating system puts a clever disguise on the hardware resources to make the jail seem like its own isolated system.

Since nobody could think of an argument against using jails we gave them a shot. Jails feature all the things we wanted to get out of VMware virtualization:

- Ease of management: you can pack up a whole jail and duplicate it easily

- Isolation: you can reboot a jail if you have to without affecting the rest of the machine

- Simple scaling: it’s easy to give a new instance an IP and get it going

At the same time jails don’t come with half the memory overhead. And theoretically I/O performance should be a lot better since there would be no emulated hard drive.

And sure enough, system CPU usage dropped by half. That CPU time was immediately put to good use by our software. And so even though we still ran at 100% CPU usage, overall throughput was much higher – up to 2.5 Mbit/s. Sure, there was still room for us to get closer to the theoretical maximum performance, but now we were in the right ballpark at least.
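To put the two throughput figures in perspective, the arithmetic can be sketched as follows. The rates come from the text; the 1 GB mailbox is a hypothetical example size, and decimal units (1 Mbit = 1,000,000 bits) are assumed.

```python
def transfer_seconds(size_bytes, rate_bits_per_s):
    """Time needed to move size_bytes at a sustained rate in bits per second."""
    return size_bytes * 8 / rate_bits_per_s


VM_RATE = 400_000        # 400 kbit/s per machine under VMware Server
JAIL_RATE = 2_500_000    # 2.5 Mbit/s per machine with jails
MAILBOX = 1_000_000_000  # hypothetical 1 GB mailbox

speedup = JAIL_RATE / VM_RATE                    # 6.25
vm_time = transfer_seconds(MAILBOX, VM_RATE)     # 20000.0 s, about 5.6 hours
jail_time = transfer_seconds(MAILBOX, JAIL_RATE) # 3200.0 s, about 53 minutes

print(f"speedup: {speedup:.2f}x")
print(f"VM: {vm_time / 3600:.1f} h, jail: {jail_time / 60:.0f} min")
```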

More expensive versions of VMware offer process migration and better resource pooling, something we’ll be keen to look into when we grow. It’s very likely our VMware setup had some problems, and perhaps they could have been resolved by using fancier VMware software or porting our software to run in Ubuntu (which would be fairly easy). But why cross the river for water? For our needs today the answer was right in front of us in FreeBSD: jails offer a much more lightweight virtualization solution and in this particular case it was a smash hit performance win.

Author: Alexander Ljungberg Tags: FreeBSD, performance, SQLite, virtual machines, VMware, YippieMove

Call this a “me too” for Solaris Containers. And OpenSolaris is free.

Containers (or “zones”) are easy to use, scale, fast, and actually fun. Really. Fun to play around with.

Just my $0.02. Seeya!

Wow. Groundbreaking stuff here. A company running out of its basement and a few colo racks sets something up that’s an ugly hack and then sets it up the right way and tries to pat themselves on the back and splash mud in a major corporation’s face.

While I will be the first to admit BSD jails and Solaris zones are great products, VMware ESX and ESXi are great products as well. They all have pluses and minuses and are better suited for certain tasks. These are tools, not religions.

Maybe you should relax a bit, there, “John.”

It’s part of the power and beauty of the Internet that people can start and run businesses cheaply. That’s how HP (and countless other successful businesses) started — literally out of a garage in HP’s case.

The author of this article didn’t say VMware was a bad product or that Jails were even good ones. There wasn’t any mud slinging going on there either. He was just chronicling his experience and he definitely wasn’t religious about it.

Mike

Mike,

VMware Server is a package designed for doing VMs on a desktop. Your article is titled Virtual Failure. The failure is on your part for picking the wrong version of VMware. Perhaps you should pick another title for your article so it seems less like you’re trying to blame VMware for YOUR bad design decision. If this article was written in a neutral way people would not be commenting in the way they are. You have accused a few people of trolling when in fact the trolling was done in a more subtle way by the way this article was written.

It seems you haven’t been reading very carefully.

I didn’t write this article. It looks like the author’s name is Alexander and he didn’t blame VMware. He even said the problem might not be VMware and that it’s “very likely” his set-up had problems. The author even goes so far as to say they will be “keen to look at” enterprise versions of VMware when his organization grows but, at least for now, BSD jails work well.

The oddest thing to me about your comments, John, is that you seem really emotional about this and upset at the guy who wrote this article because you feel he disparaged VMware — something I’m telling you I don’t see but who cares if he did anyway?? What difference would that make to you or anyone else? Maybe he could have picked a more accurate title but again, big deal.

Mike

Right you are. I skimmed right over your defensive babble and assumed you were the author.

It seems, however, you are the person that really needs to relax here. Yes, I am critical of the author’s half-baked initial architecture and then his biased blog article based on misinformation and FUD. Why it matters so much to you, though, is the real question here.

You know, John,

Your comments don’t bring anything new. You are just wasting our time, as opposed to the author of this quite good article.

You even embarrassed yourself saying, that “Vmware Server is a package designed for doing VM’s on a desktop”.

Tell it to HP for example. They sell it with HP blades.

Thank you, bye, bye.

Just the fact that you’re using FreeBSD in a jail is enough to make my day. I’ve been doing this for the last 9 years. I’m glad I’m no longer alone.

Did you check how much “system time” was spent in kernel calls versus I/O waits?

Knowing this, alone, would have drastically changed how you went about determining your problem. I would have been interested in seeing benchmarks of other virtual machines running other OS’es, to see if they were similarly capped on network I/O speed. That, and I would want to see benchmarks internal to the VM’s themselves — How fast they can FTP a file to themselves over a loopback, for example. This would give you a view of the problem with the network part of the equation removed entirely.

My guess is, VMWare wasn’t at fault. Without seeing native I/O benchmarks of the FreeBSD box you deployed it on, (i.e. benchmarks OUTSIDE of VMWare) any determination that VMWare is the culprit can’t be unsubstantiated.

The choice of putting VMWare on FreeBSD is a little funny to begin with, anyway. :) You don’t cross a river for water, true, but you don’t grow watermelons in sand either. :)

Cheers,

Bowie
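A loopback test of the kind suggested above can be sketched in Python with plain TCP sockets instead of FTP. This is only an illustration under assumptions: the function name, the 32 MiB default payload and the 64 KiB chunk size are made up here, and a real comparison would run the same script inside the guest and on the host.

```python
import socket
import threading
import time


def loopback_throughput(total_bytes=32 * 1024 * 1024, chunk=64 * 1024):
    """Send total_bytes to ourselves over 127.0.0.1.

    Returns (Mbit/s, bytes received). total_bytes should be a multiple of chunk.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    received = [0]

    def sink():
        # Accept one connection and count bytes until the sender closes.
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(chunk)
                if not data:
                    break
                received[0] += len(data)

    worker = threading.Thread(target=sink)
    worker.start()

    payload = b"\0" * chunk
    start = time.perf_counter()
    with socket.create_connection(("127.0.0.1", port)) as client:
        sent = 0
        while sent < total_bytes:
            client.sendall(payload)
            sent += chunk
    worker.join()  # wait until the receiver has drained everything
    elapsed = time.perf_counter() - start
    server.close()
    return received[0] * 8 / elapsed / 1e6, received[0]
```

Because no physical network is involved, a low number from this test inside a guest would point at the virtualization layer or the guest's network stack rather than at the wire.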

Bowie,

We did run a couple of simple internal benchmarks like dd’ing a file to local disk or to the NFS share. Then we compared those results to running the same commands directly on the host servers. I think the tests were a little too simple to draw any conclusions from, but I/O performance inside the VM was basically in single-digit MiB/s, compared to a very standard 40-50 MiB/s on the host.

I agree that VMware itself may not have been the culprit, but rather our combination of host (Ubuntu) and guest (FreeBSD) and the I/O behavior of our software.
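A dd-style sequential write test like the one described can be approximated in Python. The sketch below is an assumption-laden stand-in, not the commands that were actually run: the function name, the 64 MiB size and the 1 MiB block size are illustrative. It also reports user and system CPU time, since the split between the two was one of the questions raised above.

```python
import os
import tempfile
import time


def write_benchmark(directory, total_mib=64, block_kib=1024):
    """Write total_mib of zeros to a temporary file in directory, dd-style."""
    block = b"\0" * (block_kib * 1024)
    block_count = (total_mib * 1024) // block_kib
    cpu_before = os.times()
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            for _ in range(block_count):
                handle.write(block)
            handle.flush()
            # Force the data to disk so the page cache does not flatter the result.
            os.fsync(handle.fileno())
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    cpu_after = os.times()
    written_mib = block_count * block_kib / 1024
    return {
        "mib_per_s": written_mib / elapsed,
        "user_cpu_s": cpu_after.user - cpu_before.user,
        "system_cpu_s": cpu_after.system - cpu_before.system,
    }
```

Pointing `directory` first at a local disk and then at the NFS mount, once inside the guest and once on the host, gives the four numbers needed for a rough comparison.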

oops, “can’t be unsubstantiated” should be “can’t be substantiated.” above.

Matt Hamilton:

“We had loads of problems running FreeBSD (6.x IIRC) on VMWare. Mainly due to clock skew issues. The clock was losing time in the order of 10s of minutes per hour. We tried tweaking various sysctls but to no avail.”

1. vi /boot/loader.conf

2. Add/modify kern.hz="100".

3. Reboot

May your problems be nonexistent. :-)

Needless to say, my 3-4 FreeBSD guests (6.2) keep time like a regular dedicated box.

Tom,

The kern.hz value on all guests was set to 100. That was one of the first optimizations we did.

vmware has several issues:

1) Keeping time in a Nix environment (Linux). It has been getting better.

2) Kernel updates remove driver(s); ok more of a linux issue.

3) fstab sometimes gets borked when reinstalling drivers.

4) Too many other issues with vmware; I could go on.. but I try to avoid this beast whenever possible.

PS: Newer versions of the kernel give a place for binary blobs and that should help. Well, help in the future not with current RH4 and 5 offerings.

not to piss on your FreeBSD jail parade, or the unix/linux ppl here, but i never had these types of performance problems… and good or bad, i was running vmware out of a win2000 (i know not supported) and win2003 enviro.. and hosting other win2000/2003 term servers… the company i was working for didn’t wanna pay for term serv. license and had me refresh the grace period (i.e. rebuild) every 80 days, and their custom vb app had to be copied over from the old image to the new image (more unhealthy programming). during this copy process from Vm -> Vm, i was looking at the theoretical max for transfer rate, and when copying from Vmserver1_Vmimage1 -> Vmserver2_Vmimage2 (i.e. server to server) i hit the theoretical max each time… i’m not really sure what to say here other than, it worked great for me in windows of all places… i, um… i dunno.

Why did you select VMware Server instead of VMware ESX or ESXi? ESX and ESXi are “bare metal hypervisors” and will run circles around VMware Server. I’d only recommend VMware Server for development/testing or running live demos on a laptop for presentations and such.

I agree with couch and jds.. Full-blown ESX (now VSphere), albeit pricey, is the real McCoy. Solaris containers (zones) are key and absolutely free in x86 and Sparc solaris. You could also try Virtuozzo from Parallels Software – functionally similar to BSD Jails and Solaris containers, however with real manufacturer support for a reasonable price.

Flat files? DB over NFS? FreeBSD on VMware? Hire an architect. Holy cow.

I’m kind of surprised they are so willing to discuss this where customers can see. How many restaurants would blog about their frozen food and roaches in the kitchen?

Congratulations on finding the right solution for you–while VMware is a great solution for many organizations, it is not the right fit for all organizations.

I would like to point out though, that you were using the wrong VMware product if performance was your goal. VMware Server, as a Type 2 hypervisor (one that requires a host operating system underneath it) simply cannot provide the same level of performance as a Type 1 bare metal hypervisor such as VMware ESX or VMware ESXi.

Good luck!

Has it occurred to you that your app is shit?

You changed from one major revision of your app to another, including changes with even more than the usual obvious implication for performance. Yet you did not performance-test the app adequately before putting it into production.

When you realized this, instead of going back and doing more performance-testing of the app, you tried to blame a hazy layer of virtual buzzwords.

I’m guessing you also didn’t give yourself a reasonable path to migrate back to the old version of your app, either. I’m guessing the lack of a way back is why you stuck your heads in the sand w.r.t. evaluating your own software’s bad architecture and tried to “cloud” the issue with all this masturbation about virtual bullshit.

I’m starting to feel like the less I have to do with this world, the better.

By “this world,” I suppose I mean the world in which it’s a common, reasonable problem for a person to have, “help! I want to move my mail from one IMAP account to another.” If I want to move mail from one place to another, I do it in mbox or Maildir or MH format, because I don’t use these testicle-shrinking AOLeet webmail services.

The FAIL starts there, but it seems to spiral outwards to affect all levels of your business with flailing cluelessness.

I know you are trolling but I’ll set the record straight for our more sensible readers.

You are, as one would expect, wrong on all counts. We performance tested the application, we had a way to migrate back and we’re not trying to ‘cloud’ any issues – we’re just sharing a problem we had and what solution worked for us.

The performance was abysmal on the VMs but we had enough servers to get by anyhow until the performance problem was fixed. The cost of bad performance for a short time was smaller than the cost of not having all the new features on the market.

Moving mboxes is a great solution. Naturally it is impossible for the majority of our customers as they are moving between different hosted solutions – a Zimbra setup, Mobile Me, Google Apps, what have you.

That’s the real world we live in.

I’m not trolling any more than the others who had issue with how your situation unfolded.

And as I said, there’s no “we” about it. It’s most certainly not the world I live in. In the world I live, if you performance-test an app and then find that it doesn’t perform well in production, that means your performance testing wasn’t good enough.

I don’t think the steps you took are so unreasonable, but it seems like your way of viewing your entire situation, while not uncommon these days nor necessarily unworkable, is substantially different from an older and still relevant camp of industry geeks, and that the difference gives you a frightening blind spot to the biggest chunk of FAIL in your whole operation—your lack of insight into your app’s performance character and the reasons for it.

However you’ve not invited me to “live blog” about anything nor censored my comments, and that basic courtesy is more than I’m accustomed to. so, thank you! At least we can still talk.

It may be true that if we had been more meticulous with our performance testing there would have been less of a surprise. It’s certainly true that we are learning as we go regarding our application’s particular performance character, as opposed to how the old guard would do it.

Running a startup is a balancing act: do you go with the agile “release soon, release often” mantra or do you work your goods to perfection before taking the wraps off? We chose the former and sometimes we pay in the currency of crazy overloaded servers and late nights in the office. But as long as the customer experience is not impacted we are okay with that. The win is in time to market and flexibility.

Sydney,

It seems like you have never had the opportunity to work with huge systems scaling to millions of user accounts. Otherwise you wouldn’t be writing about performance tests in this way.

Tell Google, YouTube, etc. that they should have tested their infrastructure before releasing their products.

You are trolling.

I certainly won’t argue with success. I will say, though, that after seeing what it did to Firefox 3 I’d be really reluctant to use sqlite for anything performance-critical.

Orv, you may be comparing apples and oranges.

I wouldn’t dismiss SQLite simply because it might cause poor performance within Firefox.

Using SQLite within Firefox 3 will probably exhibit completely different behavior than using SQLite within a server-based application.

There are also numerous variables which can impact the performance of the software.

-= Stefan

When we were working on getting SQLite to work with our app we did see several postings about file locking errors with SQLite when running over NFS.

But once they were resolved SQLite was working great for us. For us SQLite was a ‘keep it simple’ solution, especially since we wanted to have separate databases for each job – jobs are entirely independent so by having independent databases too we make parallelism as easy as it’s supposed to be. SQLite also makes it easy to move the database around, compress it when it’s not in use, etc. And performance has been good after some tuning.
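The one-database-per-job idea can be sketched roughly like this. None of it is the real YippieMove code: the file naming scheme, the `messages` schema and the helper names are hypothetical, and gzip is just one possible way to compress an idle database.

```python
import gzip
import os
import shutil
import sqlite3


def job_db_path(root, job_id):
    """Hypothetical layout: one SQLite file per transfer job."""
    return os.path.join(root, f"job-{job_id}.sqlite3")


def open_job_db(root, job_id):
    """Open a job's database, unpacking it first if it was archived."""
    path = job_db_path(root, job_id)
    archive = path + ".gz"
    if not os.path.exists(path) and os.path.exists(archive):
        with gzip.open(archive, "rb") as src, open(path, "wb") as dst:
            shutil.copyfileobj(src, dst)
        os.remove(archive)
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages (uid INTEGER PRIMARY KEY, state TEXT)"
    )
    conn.commit()
    return conn


def archive_job_db(root, job_id):
    """Compress an idle job database. The connection must be closed first."""
    path = job_db_path(root, job_id)
    archive = path + ".gz"
    with open(path, "rb") as src, gzip.open(archive, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(path)
    return archive
```

Since each job owns its file, workers never contend for the same database, and moving a job to another host is a plain file copy.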

My only complaint so far is about database vacuuming. The VACUUM command takes forever to run and autovacuum eats into performance too much. A lightweight autovacuum that could run whenever X rows have been deleted would be great.
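Something close to "vacuum after X deleted rows" can be approximated at the application level. The sketch below is one possible approach, not a SQLite feature: the class name and the default threshold are made up, and it simply counts rows removed through its own `delete` method and runs a full `VACUUM` once the count passes the threshold.

```python
import sqlite3


class VacuumAfterDeletes:
    """Run VACUUM once enough rows have been deleted through this wrapper."""

    def __init__(self, conn, threshold=10000):
        self.conn = conn
        self.threshold = threshold  # assumed default; tune per workload
        self.deleted = 0            # rows deleted since the last VACUUM
        self.vacuums = 0            # number of VACUUM runs so far

    def delete(self, sql, params=()):
        cursor = self.conn.execute(sql, params)
        # VACUUM cannot run inside a transaction, so commit the delete first.
        self.conn.commit()
        self.deleted += cursor.rowcount
        if self.deleted >= self.threshold:
            self.conn.execute("VACUUM")
            self.deleted = 0
            self.vacuums += 1
        return cursor.rowcount
```

This still pays the full cost of `VACUUM`, only less often. SQLite's `auto_vacuum = INCREMENTAL` mode together with `PRAGMA incremental_vacuum(N)` may be a lighter alternative, although that mode has to be chosen before the tables are created.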

Not to mention that fancier VMWare versions hit the wallet like crazy, whereas FreeBSD Jails (or Xen, or OpenVZ, or Solaris Zones) are in fact free.

Impressive. Your amateurish design is a great selling point to potential customers. You’re using jails now, which is better, but why can’t your application handle this without the overhead of running entire OS instances?

Ted,

The reasons we virtualize are mentioned in the article, and include stronger compartmentalization and ease of deployment. And as you point out yourself, with jails the overhead is minimal.

Spending that time writing an application that was able to handle multiple customers and make good use of CPU and network resources on the single system would have been a better use of your time.

Have you also considered that this blog article does not exactly paint your company in a good light? While bad low level architectural design is commonplace in the industry most companies don’t tell the world about their mistakes in their blog.

Ted,

Sharing mistakes and successes on a blog is more common than you might think. Look at companies like Twitter and last.fm. In fact, some might say that’s the point of a blog – if it was just a PR team newsroll with no personal reflections it’d be just marketing.

In developing this application we drew from the experience of hundreds of other 2.0 companies by reading blog posts and articles. By sharing our own experiences with making our software solution better we are giving back to that community.

At the end of the article our software runs great, and we certainly don’t mind our customers knowing that.

Alexander,

Good point. While most companies like to downplay issues, being open develops a sense of trust with your customers.

This article doesn’t provide a good comparison between VMware and FreeBSD Jails, and the headline is a little sensational.

FreeBSD Jails is a great tool, and will hopefully provide a great solution here.

However, it’s too bad you didn’t try a solution like VMware ESXi (which has a free license) first.

Instead of spending time troubleshooting and tweaking all those tuning variables, it might have been simpler to try to migrate the systems to ESX first. This might have saved a lot of time and effort. And comparing the results of VMware Server vs. VMware ESXi would have been interesting to see.

From what I understand, it’s pretty easy to migrate VMware Server images from VMware Server to ESX.

Part of this is VMware’s fault – they have a confusing array of products. Did they just rename VMware ESX to VSphere? How is VSphere related to ESXi?

-= Stefan

Stefan,

You’d be right not to see this article as a side by side review of VMware and Jails – it’s more a sharing of our experience: what we did, what problem we ran into and what worked for us.

I think chances are good we’ll give VMware ESXi a shot down the road. It’ll be interesting to see how that affects the IO throughput.

I believe VSphere replaces Virtual Center, not ESX.

ESX 4.0 has been released alongside VSphere.

For people using Linux you can also look at http://linux-vserver.org/

OpenVZ is another possible solution for Linux, like linux-vserver: http://wiki.openvz.org/Main_Page

It’s not clearly stated but it is implied in your article that you may be using an sqlite database over NFS from multiple different hosts?

If that is the case, that might not be the best way to go with any kind of significant database writes happening. Multiple hosts locking/unlocking an sqlite database file constantly does not sound good.

I like sqlite and its performance is very nice for simpler things where one process on one machine is utilizing the sqlite database on its local disk, but if I were getting into using the database from multiple machines simultaneously I would start using a database server instead.

If you’ve switched from a mess of flat files to sqlite you’re already most of the way there, you’ve already SQL-ized your app. Pick your favorite SQL server and your journey towards the dark side will be complete. I know it’s a bit boring and common to just slap pgsql or mysql on a server and start using it but… it sounds like a very logical step up from sqlite with locking.

Badly-written overreaching SQL queries being executed often and lack of I/O performance are two real common things to watch out for when you go to use a regular old database server.

If your SQL load is significant and you are concerned about its performance there are plenty of ways to handle that… If your database fits in RAM you are probably good to go. If you’re up to the tens of gigs, RELENG_7 also has ZFS v13 which supports cache devices. I’m using it, I can tell you that just having a 32GB SATA SSD cache device assigned to your zpool is amazing. I am quite curious to test how mysqld databases cached on the SSD will perform.

Hi tog,

Excellent suggestion – our situation isn’t quite like that though. We actually have lots of different sqlite databases, one per transfer job we do. And those guys are only accessed by one host at a time, the one currently working that account. For ‘the big database’ which is accessed by lots of hosts we use Postgres today.

I’m really interested to hear about your success with ZFS and cache devices. We have been looking at ZFS for quite some time, but haven’t dared to take the plunge since it’s fairly new. What performance boost did you get with that SSD hooked up? Sounds like a fantastic setup.

My god, why the venom? Are these commenters vmware shills? They made a design change, so what? I don’t get the anger and viciousness… weird. I’m not sure why you’d pay for VMWare when you could be using FreeBSD Jails or Xen hypervisors, or some other $0 licence fee solution, no way I would have done it but.. Wow, so nasty… so unnecessarily nasty.