Our email transfer service YippieMove is essentially software as a service. The customer pays us to run some custom software on fast machines with a lot of bandwidth. We initially picked VMware virtualization technology for our back-end deployment because we wanted to isolate individual runs, simplify maintenance and make scaling dead easy. VMware ultimately proved to be the wrong choice for these requirements.

Ever since the launch over a year ago, we have used VMware Server 1 to instantiate the YippieMove back-end software. For that year performance was not a huge concern, because we were prioritizing many other things for YippieMove ’09. Then, towards the end of development, we began doing performance work. We switched from a data storage model best described as “a huge pile of files” to a much cleaner sqlite3 design. The reason for this was technical: the email mover process opened so many files at the same time that we’d hit various limits on simultaneously open file descriptors. While running sqlite over NFS posed its own set of challenges, they were far easier to overcome than juggling hundreds of thousands of files in a single folder.

The new sqlite3 system worked great in testing – and then promptly bogged down on the production virtual machines.

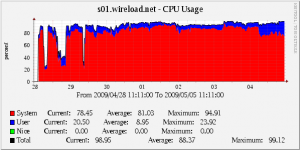

We had heard before that I/O and disk performance are the weak spots of virtualization, but we thought we could work around that by putting the job databases on an NFS export from a non-virtualized server. Instead, the slowness we saw blew our minds. The core servers spent a constant 70% of CPU time on system tasks, and despite an uninterrupted 100% CPU usage we could not transfer more than 400 KBit/s worth of IMAP traffic per physical machine. This was off by an order of magnitude from our expected throughput.

Obviously something was wrong. We doubled the amount of memory per server, we quadrupled sqlite’s internal buffers, we turned off sqlite auto-vacuuming, we turned off synchronous writes, and we added more database indexes. These things helped, but not enough. We twiddled endlessly with NFS block sizes, but that made no difference. We were confused. Certainly we had expected a performance difference between running our software in a VM and running it on the metal, but a gap as large as 10X was a wake-up call.
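For readers who want to try the same knobs, here is a sketch of how such settings map onto SQLite PRAGMAs, using Python’s sqlite3 module. The cache size, table and index are illustrative assumptions, not the values we actually used. Note that `synchronous = OFF` trades crash safety for speed, and `auto_vacuum` only takes effect on a freshly created database (or after a `VACUUM`).

```python
import sqlite3


def open_tuned_job_db(path: str, cache_kib: int = 8000) -> sqlite3.Connection:
    """Open a job database with the kinds of settings described above.

    All values are illustrative assumptions, not YippieMove's real configuration.
    """
    conn = sqlite3.connect(path)
    # Only honoured on a brand-new database (or after a later VACUUM).
    conn.execute("PRAGMA auto_vacuum = NONE")
    # A negative cache_size is a size in KiB rather than a page count;
    # 8000 KiB is four times the -2000 default of recent SQLite releases.
    conn.execute(f"PRAGMA cache_size = -{int(cache_kib)}")
    # Stop waiting for fsync(): much faster, but an OS crash or power loss
    # can leave the database file corrupted.
    conn.execute("PRAGMA synchronous = OFF")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS message ("
        " id INTEGER PRIMARY KEY, folder TEXT NOT NULL,"
        " uid INTEGER NOT NULL, size INTEGER NOT NULL)"
    )
    # An index on the columns messages are looked up by (hypothetical).
    conn.execute(
        "CREATE INDEX IF NOT EXISTS message_folder_uid ON message (folder, uid)"
    )
    return conn
```

Each PRAGMA can be read back (for example `PRAGMA synchronous` returns 0 for OFF), which is a cheap way to confirm a setting really applied to the connection you are benchmarking.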

At this point we realized that no amount of tweaking was likely to get our new sqlite3 version out of its performance hole. The raw performance just wasn’t there. We suspected that at least part of the problem was that we were running FreeBSD guests in VMware. We checked that we were using the right network card driver (yes, we were). We checked the OS version: 7.1, which was supposedly the best you could get for VMware. We tuned various sysctl values according to guides we found online. Nothing helped.

We had the option of switching to a more VM-friendly guest OS such as Ubuntu in the hope that it would improve performance. But what if that didn’t resolve the situation? That’s when FreeBSD jails came up.

Jails are a lightweight virtualization technique available on the FreeBSD platform. They are like a chroot environment on steroids: not only is the file system isolated, but individual processes are confined to a virtual environment, like a virtual machine without the machine part. The host and the jails use the same hardware, but the operating system puts a clever disguise on the hardware resources to make each jail seem like its own isolated system.

Since nobody could think of an argument against using jails we gave them a shot. Jails feature all the things we wanted to get out of VMware virtualization:

- Ease of management: you can pack up a whole jail and duplicate it easily

- Isolation: you can reboot a jail if you have to without affecting the rest of the machine

- Simple scaling: it’s easy to give a new instance an IP and get it going

At the same time, jails don’t come with half the memory overhead. And in theory I/O performance should be a lot better, since there is no emulated hard drive.

And sure enough, system CPU usage dropped by half. That CPU time was immediately put to good use by our software. So even though we still ran at 100% CPU usage, overall throughput was much higher: up to 2.5 MBit/s, roughly six times the previous 400 KBit/s. Sure, there was still room to get closer to the theoretical maximum performance, but now we were at least in the right ballpark.

More expensive versions of VMware offer process migration and better resource pooling, something we’ll be keen to look into when we grow. It’s very likely our VMware setup had some problems, and perhaps they could have been resolved by using fancier VMware software or porting our software to run in Ubuntu (which would be fairly easy). But why cross the river for water? For our needs today the answer was right in front of us in FreeBSD: jails offer a much more lightweight virtualization solution and in this particular case it was a smash hit performance win.

Author: Alexander Ljungberg

Tags: FreeBSD, performance, SQLite, virtual machines, VMware, YippieMove

Thanks for posting this, I had a very similar experience and thought that I was the only one that had problems with some of the vmware products.

http://ivoras.sharanet.org/freebsd/vmware.html

FreeBSD is notoriously slow under VMWare without some tweaking. I had similar experiences until I lowered kern.hz in /boot/loader.conf to 100. After doing this, compile times dropped insanely. That being said, running a database over NFS will *always* be slow – you need to use pre-allocated disks/dedicated partitions.

That being said, a jail-type environment like FreeBSD’s jails or Virtuozzo will always be faster than full virtualization with VMWare or Xen.

How does this compare to ESXi, and why haven’t you given that a shot?

This is a classic example of “know your workload before picking the technology.”

What you ran into was the fact that VMWare offers a full x86 emulation layer. In hypervisors that emulate that much, syscalls (of which I/O makes up the majority) are the most expensive operations. Systems that are hypervisor-ish (like BSD jails), or paravirtualized systems, don’t have as many problems dealing with syscalls. In fact, newer versions of VMWare support paravirtualization, which will take I/O like yours and speed it up tremendously. It’s called VMI, and it also needs a Linux kernel equipped with paravirt-ops to run successfully.

However, when you have control of the environment and are just trying to maximize performance, a less invasive option like BSD jails and the ilk are better options anyway.

I’ve got a ton of VMWare experience and from what I’ve found, VMWare Server is not your best bet for any kind of “production” virtualization setup–primarily because it stores your VM images on the filesystem which requires 2 layers of system calls to read data in the virtualized environment.

I’ve found the ESX is a far better solution. Since VMWare freed up ESXi 3.5, I’ve replaced 6 VMWare Server installations. Too bad it requires such serious hardware and with multiple ESX installs, managing without VirtualCenter (or whatever they’re calling it now) is a PITA.

I’ve read some good things about Xen, but haven’t done much with it on anything more than single-core P4s. (EC2 doesn’t count)

We had a similar experience. VMWare ESX carried quite a bit of overhead in our environment too. We went to linux vservers (similar to bsd jails, openvz, solaris containers et al.). Our performance gains were at least an order of magnitude.

I see you mentioned VMware Server 1; have you considered/tried VMWare ESXi Server 3.5 / 4.0? Their product advertising describes lots of performance improvements over the previous version(s).

VMware server wasn’t the right choice, that’s likely where a good portion of your problem started. By putting VMware on top of another OS (either Windows or Linux) you’re already introducing another bottleneck in the performance pipeline. ESXi is free and would’ve given you much better performance for the still cheap price of $0.00. This is especially true if your software can run on a Linux distro that supports paravirtualization, you’d get near native performance on top of the hardware.

Wow, you guys are definitely having issues. I believe the polite term for the problems you’re having is PEBKAC.

Little suggestion for ya… Hire someone with the appropriate experience for what you’re trying to do and stop winging it on your own.

Sounds like the company tried to take the cheap way out and is surprised it had poor performance. *SHOCKER!*

Try ESX and a fiber-attached SAN... your results will differ. What type of SAN did you guys use anyway? I’m guessing you probably picked it up at Radio Shack or perhaps a local yard sale?

Summary of Article: “QQ QQ QQ.. we set up a cheap network and had bad performance. Obviously it’s someone else’s fault. But fear not.. we found a hack to get us by until the next problem. Paying for quality is for suckers. Gimme free stuff!”

Right. Yeah, high-end hardware is the only way to go. Ask Google.

In the past, jails were not allowed to use multiple IP addresses. Now that has changed, and I think it is a true evolution of the system for those who appreciate simplicity and beauty.

Why on earth would anybody choose to run a production site with DB load on sqlite? It seems like a terrible choice or am I missing something?

We are not running the main database in SQLite. Rather, each individual job in progress has its own little database in addition to the main database. SQLite works well here since there is no need for concurrent access.

The main database, a postgres setup, is run without any virtualization.

Jails are quite nice, but I’ve never found a good management program for them. Did you use one, or did you do all of the copying and creating of userlands etc. by hand?

I’ve played a bit with containers on solaris/opensolaris, which is often described as ‘jails on steroids’, and they’re also very nice.

But I guess that the effort of porting your software to another OS is probably greater than the gain of easier management.

One issue I’ve run into with VMWare Workstation (I’m not sure if it applies to Server as well) is that it seems to use virtual memory on the hard drive even when you set it not to.

It creates a file in /tmp that’s the same size as the VM’s RAM, then deletes it but keeps using it. That made this problem hard to diagnose, as there’s no way to make the hidden/deleted file show up in directory listings, but I think lsof can see it.
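The behaviour described here is ordinary POSIX semantics: unlinking a file removes its name, but the data stays allocated and usable until the last open descriptor is closed. A small Python sketch (Unix-like systems only; the directory and file name are made up) shows a file vanishing from the listing while its descriptor keeps working. On Linux, `lsof +L1` lists open files whose link count has dropped to zero, which is how such files can be found.

```python
import os
import tempfile


def unlinked_but_open(size: int = 4096) -> tuple[list[str], int]:
    """Create a file, unlink it while it is still open, then keep using it.

    Returns the directory listing after the unlink and the number of bytes
    read back through the still-open descriptor.
    """
    workdir = tempfile.mkdtemp()
    path = os.path.join(workdir, "vm-ram-backing")  # hypothetical name
    with open(path, "w+b") as f:
        os.unlink(path)              # the name disappears immediately...
        listing = os.listdir(workdir)
        f.write(b"\0" * size)        # ...but the descriptor still works
        f.flush()
        f.seek(0)
        read_back = len(f.read())
    # The storage is released only now, when the last descriptor is closed.
    os.rmdir(workdir)
    return listing, read_back
```

Calling `unlinked_but_open()` returns `([], 4096)`: the directory looks empty even though 4 KiB were just written through the open file, which is exactly why such a backing file is invisible to `ls` yet still consumes disk and causes I/O.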

The solution was to bind mount /tmp into /dev/shm — then the virtual memory is in RAM. IO went from dead slow to near native when I did that.

I’m sure there’s a better way to fix this bug, and I’d be interested if anyone’s found it. (Admittedly haven’t looked at the VMWare forums in a while; maybe it’s fixed now.)

This workload pattern looks ideal for Sun Solaris zones…

There’s a nice little article here that describes how XenServer was eventually used to replace VMware for certain workloads after delivering 2x the concurrent user performance.

N.

There are a couple of serious bugs regarding disk performance in FreeBSD 7.1. See http://www.freebsd.org/releases/7.2R/relnotes.html (ciss driver) and http://unix.derkeiler.com/Mailing-Lists/FreeBSD/hackers/2009-05/msg00001.html. Upgrading to 7.2 should help.

You’ve got an interrupt problem. Probably a clash.

This article is a fairly deeply flawed comparison of VMware and BSD jails, for these reasons:

1. VMware Server is not really intended for serious production use. It’s always been marketed primarily as a product for use in development and testing environments, and for small scale users new to virtualization. VMware VI (and now VSphere) are intended for production use within a datacenter, but they weren’t being used here.

2. No information on the host machine is given at all. If you run any VMware product on a Pentium 4 with IDE hard disks, you’re going to see pretty bad performance compared to, say, a Xeon and a Fibre Channel SAN.

To really understand performance in a virtualized environment, you need to know the CPU, the amount of RAM, the size and type of disks on the storage system, and other details, to give you a sense as to the IOPS that the underlying hardware is theoretically capable of.

Also, further performance enhancements on SANs can be gleaned through disk alignment and other methods not immediately obvious to a system administrator inexperienced in storage or hypervisor engineering.

Furthermore, since Server 1.0 was in use here, it would also be helpful to know what the host OS was. A number of less talented users run Server 1 on unsupported platforms, like Windows XP or recent Ubuntu releases, in many cases with third party kernel patches. These systems cannot provide any objective sense at all as to what VMware is capable of in terms of I/O or CPU performance.

3. The real WTF here is that such a substantial amount of work was spent tweaking and improving performance of the app, while continuing to run it on a single Server 1 system, during a timeframe that occurred after the release of both ESXi and Server 2 (which can both offer superior performance to Server 1).

The amount of time and money wasted there could easily have been used to buy a modern, fast server with fast SAS disks (or better yet, redundant NAS or SAN storage) and free ESXi, and performance would doubtless have genuinely improved.

You cannot make a silk purse from a sow’s ear, and I very much suspect that was the case here in terms of initial infrastructure.

I could be wrong. I’ll happily eat some crow tempura if it turns out the authors were running Server 1 on a fully supported host OS (such as RHEL 4) on a blazing fast server with 15k RPM FC disks etc.

In general though, in production environments, VMware’s overhead is extremely low, and is, at worst, only slightly higher than the overhead you see associated with solutions like FreeBSD jails, Solaris Zones and Virtuozzo containers.

“it’s” is not “its”. Please learn to use the apostrophe properly.

Well …

Let’s recap the workload:

– Heavy DB I/O with overhead storage

– Processor intensive

– Filesystem intensive

– Network intensive

As others pointed out, you could not have picked a worse contender than VMware for that kind of workload.

And ESXi or ESX server would not have saved the day, as:

– They need beefier, expensive hardware if you are really going to enjoy that 15% lower overhead

– You need to drop an extra 10/20K to get the management extras. Nobody manages ESXi (the supposedly free hypervisor) without the VMware management console, so it is a fake freebie.

The answer is easy: lightweight virtualization that is easy on I/O-intensive apps, particularly with mail servers, where the I/O puts heavy pressure on the filesystem. FreeBSD jails is one, so is Linux LVS, so is paravirt Xen, so is OpenVZ. Note that running SQLite on NFS may not have the issues you mention, but it also brings more filesystem stress and pressure than anything else. I would say this is the culprit; I bet the moment your sqlite3 DBs are on a local filesystem, performance will double.

We had loads of problems running FreeBSD (6.x IIRC) on VMWare, mainly due to clock skew issues. The clock was losing time on the order of tens of minutes per hour. We tried tweaking various sysctls, but to no avail.

In the end we moved to KVM for the virtualisation platform and FreeBSD is much happier running on top of that. At the time ESX was still expensive, so we didn’t give that a try.

-Matt

OpenVZ on your favourite Linux distribution is an equivalent of FreeBSD jails. However, I believe (could be wrong) that it implements the ability to restrict resources on each ‘vm’.

It’s trivial to set up: just get a kernel for your RHEL-like OS from OpenVZ, or use the OpenVZ kernels that come with Debian. The OpenVZ site includes templates of most major distributions. I had a VM going with no prior experience in under 15 minutes.

But go FreeBSD :)

I’ve had a chance to work with different SQL servers (Informix, Oracle, PG, MSSQL, etc.) on several different platforms (Linux, BSD, DEC Unix, Solaris, Windows). When I ran into SQLite, I was originally intrigued by its small overhead. However, I quickly realised that SQLite isn’t good enough for anything but small configuration databases.

The major SQLite problems are:

– very poor DML performance

– very limited SQL language

– very limited subset of database structures

– no support for online backup

– very bad access concurrency

In practically every production situation, you’re better off with PostgreSQL or MySQL. The performance benefits are huge. And if you’re trying to do anything with an SQL database, get someone who understands SQL database optimisations.

We run Postgres for our concurrent-access database needs. The SQLite databases are per job, to maintain data on all the in-flight email without opening loads of files. Performance has been very good when run on regular hard drives.

“(…) we used VMware Server 1 (…)”

As you guys already mentioned, VMware Server 1.0 was a damn wrong choice… you really got it wrong.

You should try ESXi 4.0 (it’s free too).

Interesting. Can you answer this for me: have you experienced this under different virtualization software such as VirtualBox?

Tom,

Virtualbox does not offer any server version as far as I know.

The biggest issue with VMWare from a $COST$ perspective is that ESXi isn’t necessarily free. VMWare does NOT support SATA, even if you use $800 SATA RAID controllers. It’s either SAS, SCSI, Fiber Channel or iSCSI. The last time I checked, none of those options were cheap. (neither is an $800 controller, but with $800 worth of SATA drives, you get FAR more storage for the buck.) One of the nice things about virtual, distributed environments, is that when you use COTS hardware platforms, you can really start to scale. But when you drop a heavy hardware requirement in the mix, it ultimately bites back at you.

Something else to consider when asking “why not ESXi” might be that ESXi won’t install on all hardware. If it’s not supported on the HCL (http://www.vmware.com/resources/compatibility/search.php?action=base&deviceCategory=server) you’ll get an error preventing install. Also, using ESXi might limit the manageability of your server (no supported sshd enabled by default, no SNMP, may not be able to run any of your proprietary management tools, etc). I was going to use ESXi until it wouldn’t install on whatever PowerEdge I had on hand at the time.

to previous commenter Matt Dees:

No, it’s not FreeBSD that’s slow; it’s VMWare.

No matter the platform, VMWare is the slowest virtualization solution there is. Try VirtualBox and you’ll see those I/O meters and that CPU overhead shape up.

I’m a systems integrator with heavy VMware experience, but don’t call me a zealot. VMware gives crappy margins on resale; the only motivation to push the product to clients is that it’s excellent when deployed correctly.

The setup you describe suffered from a few issues, none of which were VMware’s fault.

As you already know, FreeBSD (though it will work) is not a product that is well suited to virtualization (of any kind). If you can switch to a platform with better support (eg. Linux) you’ll see massive improvements in overall memory usage and CPU. VMware will even let you share userland segments across VMs if they match, something Jails can never do.

The second issue that comes to mind is that you mention VMware Server. This product is a complete hack, running a core VMware hasn’t tuned much since its early days (more than 5 years ago). It’s the wrong product for ANY production environment.

As has been previously mentioned, VMware ESXi is a vastly superior product (or ESX if you need the extra management provided with VirtualCenter). You can get near bare-metal CPU performance, better-than-bare-metal memory management, and disk I/O exactly the same as you’d see on bare metal.

VMware has its own filesystem called VMFS that lets you perform SAN-like snapshots and allows you to migrate a running machine across hardware. If you’re looking for maximum performance it also lets you use real disk partitions.

Lastly, you are obviously I/O bound. Has any work been done trying to isolate jobs per spindle, or using a backend SAN to get a spindle multiplier effect (dozens of spindles are very fast)?

I’ve worked with NFS in several situations and nothing I’ve done with it I would consider fast or light. It eats resources and is a non-stop headache.

I don’t think VMware was the wrong product — this was a total botch job.

Yeah, apples and oranges. You can’t compare the old free version of VMware Server against its current free offering, ESXi. There are also several considerations for your storage performance. Presumably you would put your product into production on a SAN with Fibre Channel if you were expecting such performance hits, you would leverage the paravirtualization that VMware has in its VM setups, and you would try NPIV or just RDM access of an NFS share rather than a vmdk on VMFS to increase performance. Another go-around might be in your future if you are serious about using this in production and looking for HA and DRS capabilities. If you run two servers (say, app to DB server) across a virtual switch, you can run up to the speed of the processor clock cycles and end up around 2.5Gb/s, because you are not traversing a physical NIC (network speeds in the virtual switch are limited only by CPU clock cycles). Good luck with your next round of testing.

For the price ($0), I would absolutely look into Xen for virtualization. And since it paravirtualizes, its performance can be significantly better than even ESX in I/O and CPU tasks.

We are a VMWare ESX shop that hosts ~1000 customers on a Citrix Farm, and after a performance analysis and comparison, we are now migrating completely to Xen for performance, open access and manageability.

I can’t honestly believe you were using VMware Server instead of a version of the ESX platform. I don’t think you would have had the same issues. ESX 4.0 just came out with WAY better I/O and CPU performance (especially with the new Intel CPUs that have better virtualization capabilities). VMware Server is great for development environments etc… not for production systems with big I/O needs. Also, ESX is a bare-metal hypervisor (kinda different/faster).

Sain0rs says:

June 2, 2009 at 4:45 am

Sain0rs’ comment:

“ESXi won’t install on all hardware. If it’s not supported on the HCL … you’ll get an error preventing install.”

is not true. It just means that tech support will not help you if they think the problem is with the hardware.

@ Alexey Parshin

I believe the proper response to what you claim about SQLite would be: “bollox”.

Its DML subset outperforms MySQL and PostgreSQL in any test, any environment – please show me an example of current versions that state otherwise.

Its access concurrency suffers no limitations I am aware of (having used SQLite since early version 1) – again, show an example stating otherwise.

“Very” limited SQL support? “Very” limited because it does not have MySQL specific commands? Oh, gosh…

It is intended as a very bare-bones DBMS solution for optimal performance; backup functionality has no place in it. If you don’t know how to dump and rerun, then you are quite possibly in the wrong field of work.
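The “dump and rerun” approach can be sketched with Python’s sqlite3 module: `Connection.iterdump()` yields the SQL statements that recreate a database, and replaying them with `executescript()` rebuilds a copy. The helper name is hypothetical. (Since Python 3.7 the module also exposes `Connection.backup()`, which wraps SQLite’s online backup API.)

```python
import sqlite3


def dump_and_restore(src: sqlite3.Connection) -> sqlite3.Connection:
    """Hypothetical helper: copy `src` by dumping it to SQL text and replaying it."""
    # iterdump() yields BEGIN TRANSACTION; CREATE ...; INSERT ...; COMMIT;
    script = "\n".join(src.iterdump())
    dst = sqlite3.connect(":memory:")
    dst.executescript(script)  # replay the dump into a fresh database
    return dst
```

In practice the dump text would be written to a file and replayed later with the `sqlite3` shell or the same `executescript()` call; keeping it in memory here just makes the round trip easy to see.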

Sorry, sir, but you are pulling all of your statements regarding SQLite out of your rear.

We ditched VMware server for XEN, and have been much happier with it. We haven’t run any performance tests, but it seems at least as fast as VMware, with much easier configuration (editing text files); starting/stopping/upgrading are easier as well (we use Debian, so a simple ‘apt-get update/dist-upgrade’ does the trick), and we have more flexibility with the raw disk formats.

Not only that, XEN leaves fewer files in the virtual folders, which is also nicer. Plus we can configure VMs to use 4 CPU cores instead of just two.

These might seem like nit-picking things, but it’s certainly made running virtual machines more manageable.

To the original poster, what host OS did you use to host your guest FreeBSD server?

Thanks

Ubuntu 8.04 LTS.

I enjoyed the article. I enjoyed many of the comments. It is true that using VMware Server (any version) will perform more poorly than ESX and/or ESXi.

What I think some of the comments point out is that so many people have a bias toward full machine virtualization and against OS or container type virtualization.

While the situation in this article is flawed in several ways, that doesn’t mean that FreeBSD Jails aren’t appropriate for certain use cases, especially when trying to virtualize FreeBSD.

On Linux, as mentioned by several commenters, there is Linux-VServer and OpenVZ… and in the not-too-distant future there will be Linux Native Containers.

I’ve used VMware Server, VirtualBox, ESX (co-workers actually), KVM, Linux-VServer and OpenVZ. If I’m doing Linux on Linux virtualization and I don’t have any special needs that OpenVZ can’t handle, I use OpenVZ. Even though it is OS / container type virtualization it still offers some fancy features like checkpointing and live migration AND it doesn’t require any special SAN storage.

I certainly don’t claim that OpenVZ is the solution in every use case, but for many of mine it definitely is.

I wish people would stop dissing OS / container (and Jails too) type virtualization and thinking everything must be solved with very expensive hardware, SAN storage and VMware ESX/ESXi. While I’m sure there are many use cases where that is a fantastic solution, it doesn’t mean that others can’t find perfectly good solutions with other products.

I’m currently testing ESXi 3.5 patch 4 on an AMD Opteron with 16GB of memory and SAS drives. It’s fully supported on VMware’s HCL. I was planning on trying ESXi 4.0 next, but all this talk of XEN has me intrigued. I’m one to use the best products no matter who makes them.

What is the best host OS as far as support community for XEN? I was planning on using CentOS since it’s pretty close to RedHat.

Things I didn’t like about ESXi: the VI Client is Windows-only (I believe there is a Mac beta right now), and some of the backup stuff you have to buy, though most of it can be scripted.

Just want to test drive all the “cars” before I decide.

Thanks for any help in advance.

You will suffer lots of overhead with VMware Server; it’s not bare metal. If you’re going cheap, which you seem to be, try ESXi: it’s free and it’s bare metal.

Good luck

Sounds like most of the folks here would have been little help to the original poster. They needed a cheap and fast way to get their systems up and running, and the advice from people here seems to come down to: “spend 50K on hardware and 10K a week on my engineering expertise and you will be fine”.

This is not a bank or a brokerage firm replicating data that needs to be audited or used by lawyers in multi-million dollar court cases, it’s a small startup trying to make it easy for people to transfer email. For the commenter who stated SQLite is only useful for storing configuration information: what makes you think that isn’t what they are doing? Solaris uses SQLite for this sort of thing and it is “in production” lots of places.

William Golden Wilkins,

The host OS was Ubuntu Server of a recent release. I realize I didn’t provide a lot of hardware details here but the point is that on the same hardware jails did better for us in our particular use case.

You mention investing in fancier hardware but with our current situation I don’t see the need. We can scale beautifully with our jails solution on commodity hardware, it seems.

Matt Hamilton,

We had those clock skew issues too! Drove us nuts.

VMware Server is not a bare metal hypervisor, all resource requests have to go through the intermediary host operating system. It’s no wonder you had performance issues.

Try vSphere ESXi 4.0, it’s a free download (sans live migration, HA, and DRS support) and installs directly onto the host hardware unlike VMware Server.

ESXi is all well and good, but it doesn’t work at all with SATA except experimentally on a couple of controllers, and even then the deployment notes there all but scream “beware of the leopard”. This makes it a non-starter on mid-range hardware.

Xenserver 5 or ESXi 4 both free.

Thoughts, pros/cons and experience without the hassles, please…

Well, I’ve been running ESX3.5 and ESXi for a while now, and am converting to XenServer5(.5) for a few reasons. Take them for what they’re worth.

1) XenServer is now free for what used to be the Enterprise version, which includes all the enterprise features. For us, this translated into over $50,000 in VMWare licensing we didn’t have to purchase.

2) XenMotion (aka. vMotion) is free, which makes maintenance on the host servers extremely simple and convenient.

3) The host servers are freely accessible and easy to work with, making them extremely convenient for scripting (not true with ESXi).

4) Performance improvements. We have a variety of workloads, but we saw improvements across the board with CPU and I/O. The most noticeable was for our Citrix Servers (login/logout time decreased, user responsiveness improved, etc). All of this was an apples to apples comparison, using the exact same hardware.

5) A matter of taste, but I like Citrix’s XenCenter tool. Admittedly it’s not the most feature-complete beast out there, but it’s easier for many basic tasks, and significantly more responsive to boot.

“VMware will even let you share userland segments across VMs if they match, something Jails can never do.” –Matthew Fisch

That does exist in FreeBSD and pretty much everything else in the form of shared libraries. If you use hardlinks (or potentially nullfs/unionfs) to create your jails, the memory usage of your various shared libraries should be shared between the host and all of its jails.
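A sketch of the hardlink approach in Python, assuming a prepared base userland directory: every regular file in the new jail tree becomes another link to the same inode as the base copy, so a shared library loaded in several jails is a single file to the kernel and its cached pages can be shared. Symlinks are recreated as symlinks. The helper name and paths are hypothetical, and nullfs/unionfs mounts (also mentioned above) are alternatives. Hard links only work within one filesystem, and writing to a linked file in place changes it for every jail that links to it.

```python
import os


def hardlink_tree(base: str, dest: str) -> None:
    """Hypothetical helper: mirror `base` into `dest`, hard-linking regular files.

    Every regular file in `dest` shares an inode with its counterpart in `base`.
    Both trees must live on the same filesystem, since hard links cannot cross
    filesystems.
    """
    # followlinks=False (the default): symlinked directories are listed in
    # `dirs` but not descended into.
    for root, dirs, files in os.walk(base):
        target_dir = os.path.normpath(os.path.join(dest, os.path.relpath(root, base)))
        os.makedirs(target_dir, exist_ok=True)
        for name in dirs:
            src = os.path.join(root, name)
            if os.path.islink(src):
                # Recreate a symlinked directory as a symlink.
                os.symlink(os.readlink(src), os.path.join(target_dir, name))
        for name in files:
            src = os.path.join(root, name)
            dst = os.path.join(target_dir, name)
            if os.path.islink(src):
                os.symlink(os.readlink(src), dst)  # keep symlinks as symlinks
            else:
                os.link(src, dst)  # new name, same inode as the base copy
```

After running it, `os.stat()` on a library in the base tree and in the jail tree reports the same `st_ino`, and the link count goes up by one per jail.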

Do you think it is a mistake to present your service as based on the cheapest hardware and software you can find? No support and no experience. Boy, do I want to send this bunch some money and my corporate data.

at Mauricev

Sain0rs’ comment:

“ESXi won’t install on all hardware. If it’s not supported on the HCL … you’ll get an error preventing install.”

is not true. It just means that tech support will not help you if they think the problem is with the hardware.

That is also not true.

For example we tried to install ESXi on a SuperMicro with a RAID card that was in the HCL…well ESXi could see the disks but as soon as we created a RAID, even RAID0 it would simply not see them.

ESXi seems to be extremely picky with drivers.

That being said, I think VMWare is becoming the Microsoft of Virtualization. It’s extremely expensive…and you can get better product for free…