Our email transfer service YippieMove is essentially software as a service. The customer pays us to run some custom software on fast machines with a lot of bandwidth. We initially picked VMware virtualization technology for our back-end deployment because we desired to isolate individual runs, to simplify maintenance and to make scaling dead easy. VMware was ultimately proven to be the wrong choice for these requirements.

Ever since the launch over a year ago we used VMware Server 1 for instantiating the YippieMove back-end software. For that year performance was not a huge concern because there were many other things we were prioritizing on for YippieMove ’09. Then, towards the end of development we began doing performance work. We switched from a data storage model best described as “a huge pile of files” to a much cleaner sqlite3 design. The reason for this was technical: the email mover process opened so many files at the same time that we’d hit various limits on simultaneously open file descriptors. While running sqlite over NFS posed its own set of challenges, they were not as insurmountable as juggling hundreds of thousands of files in a single folder.

The new sqlite3 system worked great in testing – and then promptly bogged down on the production virtual machines.

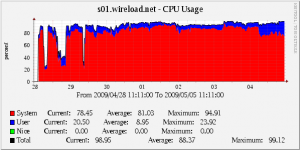

We had heard before that I/O performance and disk performance are the weaknesses of virtualization but we thought we could work around that by putting the job databases on an NFS export from a non virtualized server. Instead the slowness we saw blew our minds. The core servers spent a constant 70% of CPU time with system tasks and despite an uninterrupted 100% CPU usage we could not transfer more than 400KBit/s worth of IMAP traffic per physical machine. This was off by a magnitude from our expected throughput.

Obviously something was wrong. We doubled the amount of memory per server, we quadrupled sqlite’s internal buffers, we turned off sqlite auto-vacuuming, we turned off synchronization, we added more database indexes. These things helped but not enough. We twiddled endlessly with NFS block sizes but that gave nothing. We were confused. Certainly we had expected a performance difference between running our software in a VM compared to running on the metal, but that it could be as much as 10X was a wake-up call.

At this point we realized that no amount of tweaking was likely to get our new sqlite3 version out of its performance hole. The raw performance just wasn’t there. We suspected at least part of the problem was that we were running FreeBSD guests in VMware. We checked that we were using the right network card driver (yes we were). We checked the OS version – 7.1, yep that one was supposedly the best you could get for VMware. We tuned various sysctl values according to guides we found online. Nothing helped.

We had the ability to switch to a more VM friendly client OS such as Ubuntu and hope it would improve performance. But what if that wouldn’t resolve the situation? That’s when FreeBSD jails came up.

Jails are a sort of lightweight virtualization technique available on the FreeBSD platform. They are like a chroot environment on steroids where not only the file system is isolated out but individual processes are confined to a virtual environment – like a virtual machine without the machine part. The host and the jails use the same hardware but the operating system puts a clever disguise on the hardware resources to make the jail seem like its own isolated system.

Since nobody could think of an argument against using jails we gave them a shot. Jails feature all the things we wanted to get out of VMware virtualization:

- Ease of management: you can pack up a whole jail and duplicate it easily

- Isolation: you can reboot a jail if you have to without affecting the rest of the machine

- Simple scaling: it’s easy to give a new instance an IP and get it going

At the same time jails don’t come with half the memory overhead. And theoretically IO performance should be a lot better since there was no emulated harddrive.

And sure enough, system CPU usage dropped by half. That CPU time was immediately put to good use by our software. And so even that we still ran at 100% CPU usage overall throughput was much higher – up to 2.5MBit/s. Sure there was still space for us to get closer to the theoretical maximum performance but now we were in the right ballpark at least.

More expensive versions of VMware offer process migration and better resource pooling, something we’ll be keen to look into when we grow. It’s very likely our VMware setup had some problems, and perhaps they could have been resolved by using fancier VMware software or porting our software to run in Ubuntu (which would be fairly easy). But why cross the river for water? For our needs today the answer was right in front of us in FreeBSD: jails offer a much more lightweight virtualization solution and in this particular case it was a smash hit performance win.

Author: Alexander Ljungberg Tags: FreeBSD, performance, SQLite, virtual machines, VMware, YippieMove